In the latest Reuters Institute Digital News Report, fewer than four in ten people said that they trust most news most of the time (38% of those surveyed in January 2020, down four percentage points from 2019)¹. Imagine the numbers today.

By any definition, it’s dishonesty.

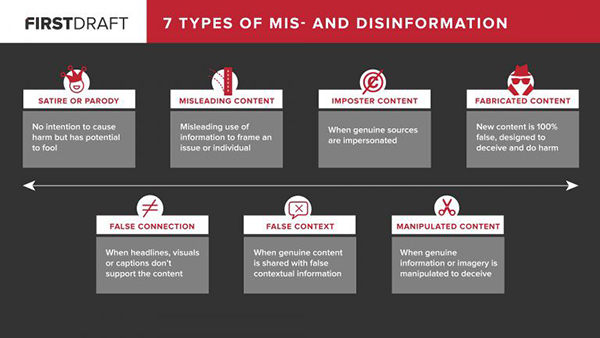

Claire Wardle of First Draft describes seven types of fake news³, ranging from accidental to insidious. The Council of Europe defines it as three “notions”: dis-information, mis-information, and mal-information⁴.

By any definition, no matter how nuanced the spectrum of intent, false news is dishonesty. And although the why behind the creation is integral to understanding the misinformation ecosystem, the what — simply the ability to identify content as true or false — is highly difficult for humans.

And what is most worrisome is the increasing global effect of false news over our political, economic, and social well-being.

The seven types of fake news from First Draft, which works to protect communities across the world from harmful information.

“Fake news is not a problem of potential swing voters being misled. It reflects the potential for people on the extremes to be trapped in echo chambers that aren’t just reinforcing their opinions, but providing them with false and misleading factual claims that seem to reinforce those opinions.”²

Fake news? Don't blame the bot.

A 2018 study by Soroush Vosoughi, Deb Roy, and Sinan Aral found that “robots accelerated the spread of true and false news at the same rate, implying that false news spreads more than the truth because humans, not robots, are more likely to spread it.”²

Their research found that falsehoods travel six times faster, on average, than truth. And although falsehoods outpaced the truth on every subject, the pace for politics was unparalleled².

When campaigns use incorrect imagery with the intent to sway and shock, as a new ad for President Trump’s reelection campaign did to reinforce the presence of “chaos & violence” in the US, they prey on our natural tendency towards sensationalism.

Exactly how the intensity of emotional reactions induced by a story influences human behavior requires more study, but we do know that content which arouses greater fear, disgust, and surprise compels individuals to share farther, faster, and deeper².

If a human brain can’t evaluate a story’s veracity, especially under emotional duress, two Danish growth startups, Abzu and Byrd, wondered if a robot brain could.

Turns out, Abzu’s proprietary QLattice® (a new artificial intelligence technology), applied through Byrd’s platform (a media marketplace for news outlets), can rate the truthiness of content very, very well.

This Facebook ad from the Trump campaign used a 2014 image of protesters and police officers in Ukraine, ostensibly as a depiction of US protesters.

Abzu’s proprietary AI identifies false news.

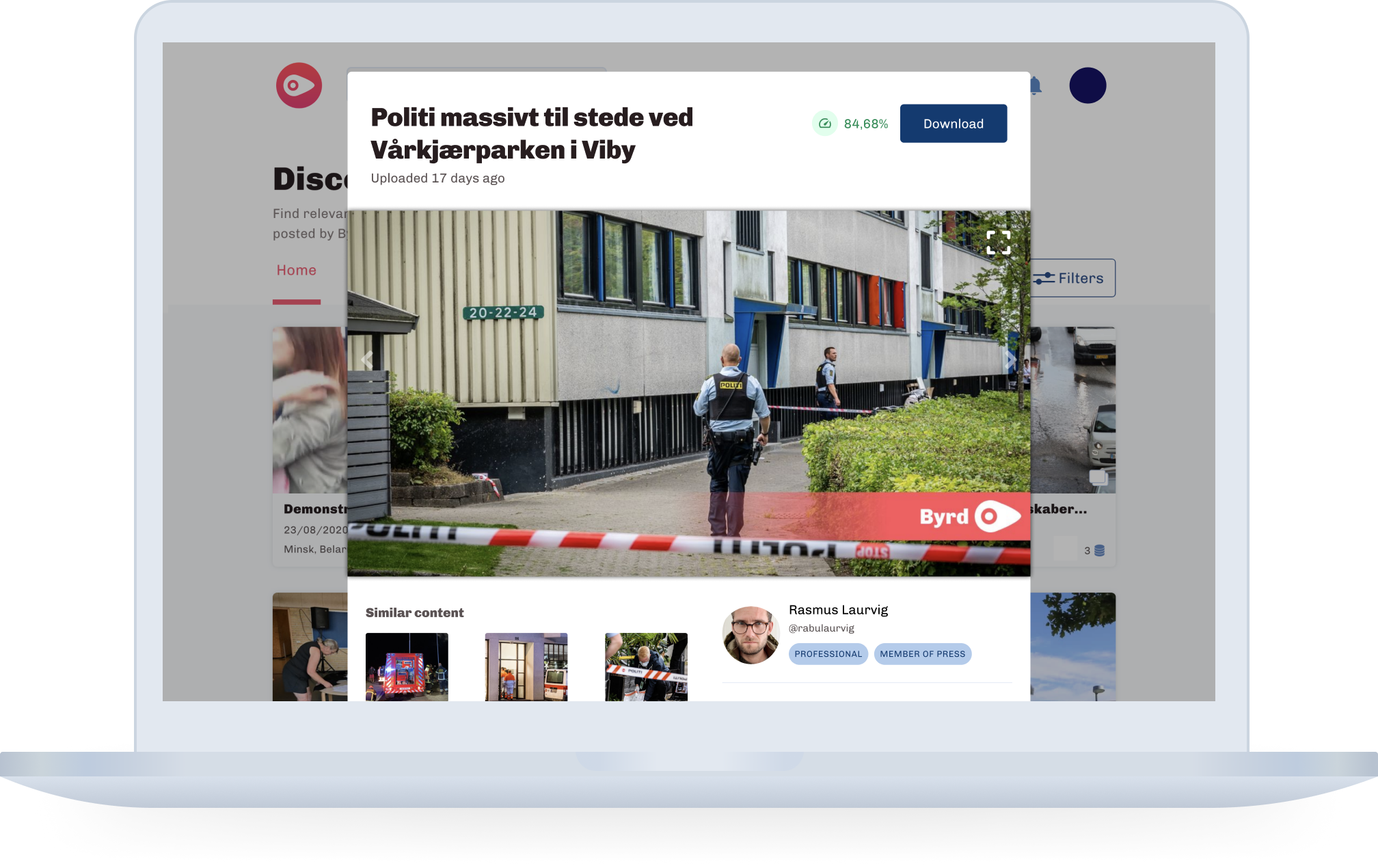

Byrd is a leading breaking news and content platform that connects news outlets with photographers and videographers in real time through its Byrd Breaking marketplace. Abzu is an artificial intelligence applied research startup with a pioneering, explainable, and transparent machine learning technology inspired by quantum mechanics.

Together, these Danish startups have built a solution that automatically detects and flags falsified content in breaking news, rating each piece of media with a probability score of trustworthiness.

Abzu and Byrd are delivering near real-time verification on breaking news.

Each story and corresponding media undergo a machine learning verification process that captures the majority of false content, even as news breaks. If the artificial intelligence is uncertain, Byrd manually verifies content.
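The routing described above can be pictured as a simple threshold rule on the probability score. The sketch below is only an illustration under stated assumptions, not Abzu’s or Byrd’s actual implementation: the function name `triage`, the two thresholds, and the route labels are all hypothetical, and the article does not say where the real cut-offs sit.

```python
from dataclasses import dataclass

# Hypothetical cut-offs: the article does not state the real values.
TRUST_THRESHOLD = 0.90   # at or above this, treat the content as trustworthy
FALSE_THRESHOLD = 0.10   # at or below this, flag the content as falsified


@dataclass(frozen=True)
class Verdict:
    story_id: str
    score: float   # model's probability that the content is trustworthy
    route: str     # "trusted", "flagged_false", or "manual_review"


def triage(story_id: str, score: float) -> Verdict:
    """Route a story based on its trustworthiness score (assumed to be in [0, 1])."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be between 0 and 1, got {score}")
    if score >= TRUST_THRESHOLD:
        route = "trusted"
    elif score <= FALSE_THRESHOLD:
        route = "flagged_false"
    else:
        # The model is uncertain, so a human verifies the content.
        route = "manual_review"
    return Verdict(story_id, score, route)


if __name__ == "__main__":
    for story_id, score in [("story-1", 0.97), ("story-2", 0.03), ("story-3", 0.55)]:
        print(triage(story_id, score))
```

Widening the gap between the two thresholds sends more stories to human reviewers, while narrowing it leans more heavily on the model. That is the trade-off any such scheme has to tune.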

Abzu’s transparent and explainable graph-based models let Byrd see precisely which inputs were used to decide that content has been falsified. In other words, Byrd can understand specifically why content was flagged as false, no matter the intent behind the falsification.

Once the what — the ability to identify content as true or false — has been taken out of our sweaty and clumsy human hands and put into a robot’s capable and steady algorithms, then the why behind false news creation can be explored.

This will empower journalists and the news media to explore and tell stories more thoroughly, accurately, and confidently. In a world of increasing instability, that is the kind of data-driven decision making that creates transformational industry leaders.

What a time to be a̵l̵i̵v̵e̵ dead inside.

Abzu is fighting false news with its proprietary AI.

It’s vital for media networks to ensure stories are true and trustworthy. Mortal extremes exacerbate our consumption of and attitude towards news media, and we don’t need another crisis or election to remind us how susceptible we are to conspiracies and misinformation.

False news is an increasing global “infodemic” precisely because journalists — professional rationalists in emotional storms — no longer govern journalism. Greater access to social media and other platforms used to circulate news provides greater access to a wider range of sources and “alternative facts”.

But Abzu and Byrd see a different future: one where journalists and the news media can identify the trustworthiness of content with conviction, and can navigate the news ecosystem to a more transparent and trustworthy place.

“Our photographers are a crucial source of information, and we need to identify anyone trying to bring distrust into the media flow. With Abzu, we have been able to identify the majority of falsified news and also understand the reasons why stories are being falsified. It has given us another tool to fight fake news — and this tool applies itself.” Mikkel Reymann Stephensen, co-founder of Byrd

When falsehoods are 70% more likely to be retweeted than the truth², how can humans preserve a news ecosystem that values and promotes accuracy? By introducing a little cold rationality to the environs: an artificially intelligent ally to save us from our own humanity.

References:

[1] Digital News Report 2020. Reuters Institute for the Study of Journalism, 2020. https://reutersinstitute.politics.ox.ac.uk/sites/default/files/2020-06/DNR_2020_FINAL.pdf

[2] The Spread of True and False News Online. Science, 2018. https://science.sciencemag.org/content/359/6380/1146

[3] Fake News. It’s Complicated. First Draft, 2017. https://firstdraftnews.org/latest/fake-news-complicated/

[4] Information Disorder: Toward an Interdisciplinary Framework for Research and Policy Making. Council of Europe report, 2017. https://rm.coe.int/information-disorder-report-november-2017/1680764666