Why — and how — Abzu’s artificial intelligence is fighting false news.

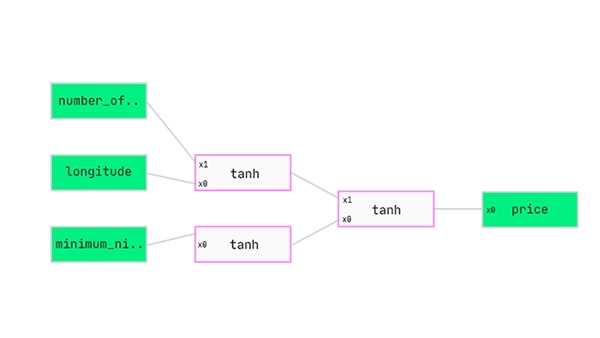

The explainable models from Abzu’s QLattice allow Byrd to understand precisely which inputs were used to determine whether content has been falsified.

How I learned to stop encoding and love the QLattice.

Curing your data preprocessing blues: Automatic handling of categorical data and scaling.

Accelerating scientific discoveries with explainable AI: A breast cancer example.

A 17-minute video about Abzu’s origins and an impactful application of explainable AI in life science: diagnosing breast cancer mortality.

Emerging Technologies for Healthcare: Internet of Things and Deep Learning Models.

“Emerging Technologies for Healthcare” begins with an IoT-based solution for the automated healthcare sector, which is then enhanced with advanced deep learning techniques.

Black-box medicine is taking over. Here’s an alternative.

The increasing application of black-box models gives rise to a range of both ethical and scientific questions.

arXiv

Symbolic regression outperforms other models for small data sets.

arXiv

An approach to symbolic regression using Feyn.

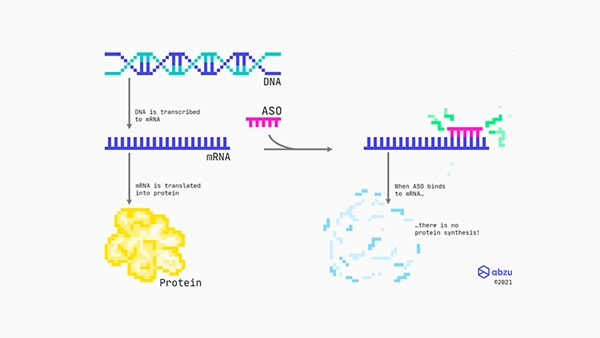

AI and nucleic acid-based medicine: can symbolic regression unite them?

Abzu researches the best way to design antisense oligonucleotides (ASOs), including which features contribute to toxicity.

The QLattice®: A new machine learning model you didn’t know you needed

Data sciencey-sphere, I have big news: A radical new machine learning model has surfaced.

Opening the black box of AI with the QLattice.

Opening the black box of AI with explainable AI (the QLattice). What does an explainable model look like? What does a plot look like?