All QLattice content.

The explainable AI that reveals the insights that fuel innovation.

Try the QLattice.

Experience the future of AI, where accuracy meets simplicity and explainability.

Models developed by the QLattice have unparalleled accuracy, even with very little data, and are uniquely simple to understand.

Select your favorite media.

Or simply browse all content about the QLattice.

Abzu is proud to announce its participation in the ELEGANT NORTH project, contributing with expertise in artificial intelligence and bioinformatics to develop cutting-edge algorithms for analyzing complex genetic data, with a focus on improving patient outcomes.

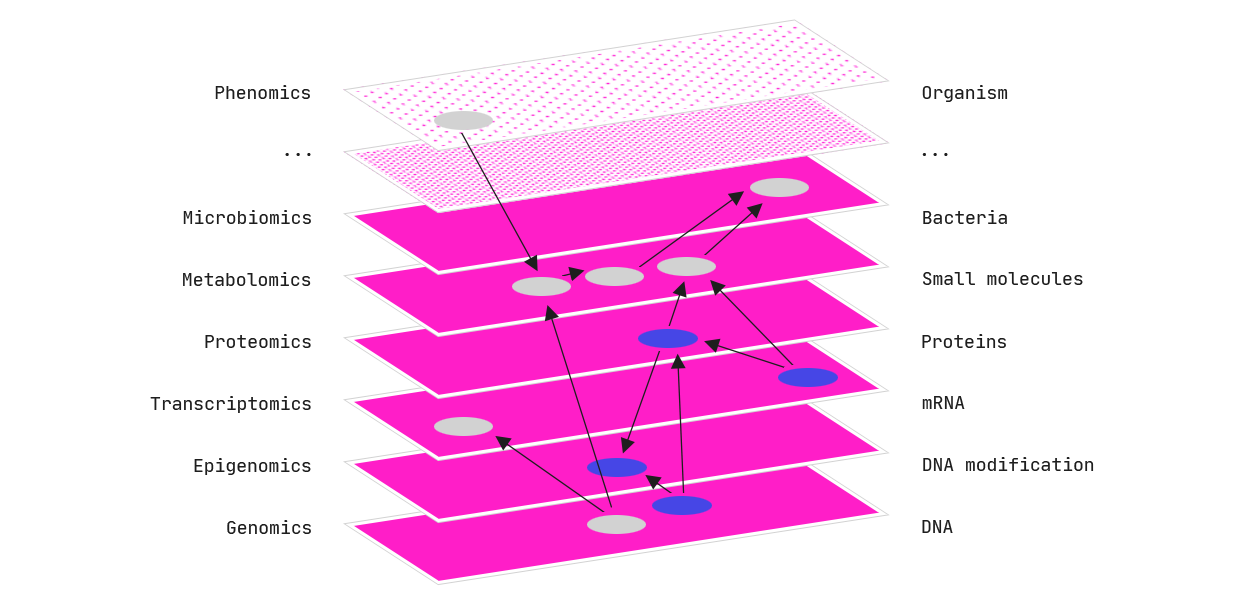

Multi-system-level analysis reveals differential expression of stress response-associated genes in inflammatory solar lentigo.

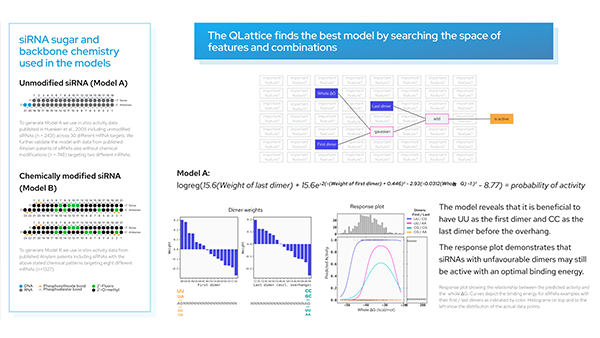

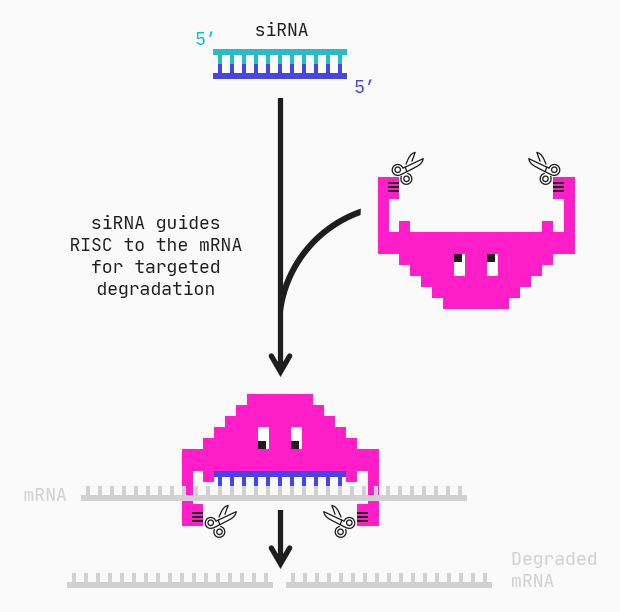

Here we use the QLattice® to generate siRNA activity models from publicly available data to create insights that can be used to design active siRNAs.

Part one of a two-part developer diary on building a stratified data splitter for Abzu's python library Feyn and the QLattice.
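
For readers new to the idea, a stratified split divides a dataset so that each class keeps roughly the same share in the training and test sets. The sketch below is a generic illustration in plain Python, not Feyn's implementation; the function name `stratified_split` and its parameters are assumptions made up for this example.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.25, seed=0):
    """Return (train_indices, test_indices) that preserve label proportions.

    Hypothetical helper for illustration only; not part of Feyn.
    """
    rng = random.Random(seed)

    # Group row indices by their class label.
    by_label = defaultdict(list)
    for index, label in enumerate(labels):
        by_label[label].append(index)

    train, test = [], []
    # Split each class separately so every class contributes its share.
    for label in sorted(by_label, key=repr):
        indices = by_label[label]
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)


labels = ["a"] * 8 + ["b"] * 4
train_idx, test_idx = stratified_split(labels, test_fraction=0.25)
```

Splitting class by class, rather than shuffling the whole dataset once, is what keeps a rare class from landing entirely in one of the two sets.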

This session provides an in-depth look at several critical aspects of the AI Act from the perspective of developers.

Lykke Pedersen, Chief Pharma Officer at Abzu, considers the benefits of explainable AI and its applications within the pharma industry.

MYC targeting by OMO-103 in solid tumors: a phase 1 trial.

Casper Wilstrup on The AI and Digital Transformation Podcast.

Explainable artificial intelligence approaches for COVID-19 prognosis prediction using clinical markers.

Dr. Andree Bates and Casper Wilstrup discuss how Abzu’s QLattice explainable AI algorithm can be used to accelerate disease understanding, drug design, and insights in Pharma R&D.

Deep learning works like half a brain – without the other half, AI cannot meet growing expectations.

Listen to Casper Wilstrup, CEO of Abzu, who sees the next step as AI going from being an aid to directly taking over human work.

A discussion on AI, science, and philosophy, and Casper explains the symbolic AI behind Abzu's proprietary QLattice®.

Explainable machine learning identifies multi-omics signatures of muscle response to spaceflight in mice.

An interpretable machine learning model using the gut microbiome to predict clinical E. faecium infection in human stem-cell transplant recipients.

Explainable Occupancy Prediction Using the QLattice.

Biological research and space health enabled by machine learning to support deep space missions.

Oligonucleotide Therapeutics Society (OTS) 2023 poster and keynote.

Abzu, the Danish-Spanish startup that builds and applies trustworthy AI to tackle complex challenges for the world’s leading companies, secures ISO 27001:2022 certification, reinforcing its value proposition for explainable, resilient, and safe AI solutions.

An Explainable Framework to Predict Child Sexual Abuse Awareness in People Using Supervised Machine Learning Models.

Using LLM Models and Explainable ML to Analyse Biomarkers at Single Cell Level for Improved Understanding of Diseases.

Predicting weight loss success on a new Nordic diet: an untargeted multi-platform metabolomics and machine learning approach.

A novel QLattice-based whitening machine learning model of landslide susceptibility mapping.

Plasma proteomics discovery of mental health risk biomarkers in adolescents.

Analysis of the relationship between fetal health prediction features with machine learning Feyn QLattice regression model.

Artificial intelligence for diagnosis of mild–moderate COVID-19 using haematological markers.

Information fusion via symbolic regression: A tutorial in the context of human health.

Abzu® announced that the United States Patent and Trademark Office (USPTO) has issued Abzu ApS patent number US 11,537,686, titled “Method of Deriving a Correlation”, which protects the technology behind the pioneering QLattice algorithm.

Quantum lattices for early cancer detection through machine learning.

On Forward: An interview with Casper Wilstrup for an in-depth intro into Abzu and Abzu's proprietary trustworthy AI technology.

Predicting inpatient mortality in patients with inflammatory bowel disease: A machine learning approach.

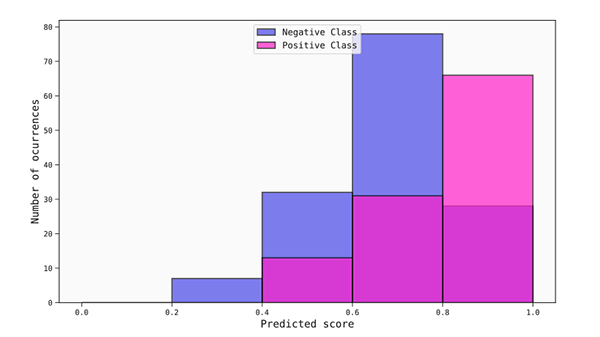

The simplest way to think about binary classification is that it is about sorting things into exactly two buckets.

Identifying interactions in omics data for clinical biomarker discovery using symbolic regression.

Combining symbolic regression with the Cox proportional hazards model improves prediction of heart failure deaths.

Symbolic regression analysis of interactions between first trimester maternal serum adipokines in pregnancies which develop pre-eclampsia.

Explainable “white-box” machine learning is the way forward in preeclampsia screening.

We're thrilled to announce that Gartner has named Abzu a "Cool Vendor" in AI for excelling in explainability, fairness, and trustworthiness.

An example of peptide drug development: Featurization and modeling using anticancer peptides.

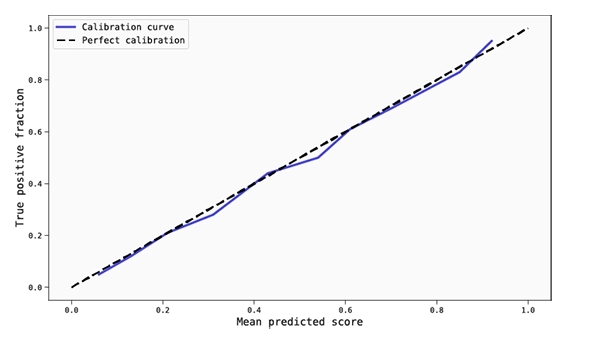

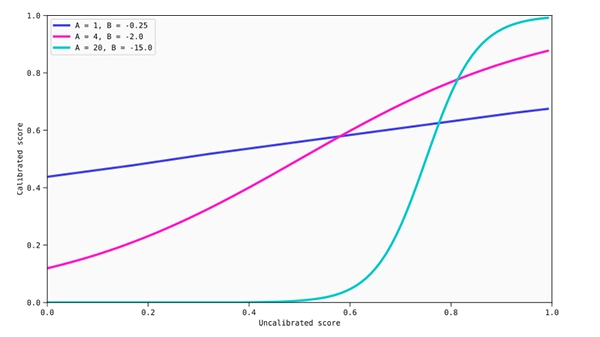

Let's study how well calibrated the QLattice models are, and to what extent calibrators can improve them.

What do machine learning model outputs represent? What if the predictions that we're making come with a future risk?

Calibrators are tools used to transform the scores generated by your models into (almost) real mathematical probabilities.
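
As a rough illustration of the idea, the sketch below uses histogram binning, one of the simplest calibration techniques: scores are grouped into equal-width bins, and each bin is mapped to the fraction of positive outcomes observed in it. It assumes scores lie in [0, 1] and outcomes are 0 or 1. The function names are hypothetical and this is not the calibrator shipped with Feyn or the QLattice.

```python
def fit_binning_calibrator(scores, outcomes, n_bins=5):
    """Learn one empirical positive rate per equal-width score bin on [0, 1].

    Hypothetical helper for illustration only.
    """
    positives = [0] * n_bins
    counts = [0] * n_bins
    for score, outcome in zip(scores, outcomes):
        # A score of exactly 1.0 is placed in the last bin.
        bin_index = min(int(score * n_bins), n_bins - 1)
        counts[bin_index] += 1
        positives[bin_index] += outcome

    # Bins with no data fall back to their midpoint (an arbitrary choice).
    return [
        positives[i] / counts[i] if counts[i] else (i + 0.5) / n_bins
        for i in range(n_bins)
    ]


def calibrate(score, bin_rates):
    """Map a raw score to the positive rate observed in its bin."""
    n_bins = len(bin_rates)
    return bin_rates[min(int(score * n_bins), n_bins - 1)]


scores = [0.1, 0.15, 0.55, 0.6, 0.9, 0.95, 0.92, 0.97]
outcomes = [0, 0, 1, 0, 1, 1, 0, 1]
rates = fit_binning_calibrator(scores, outcomes, n_bins=2)
```

In practice the calibrator would be fitted on held-out data rather than on the data used to train the model, so that the bin rates are not overly optimistic.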

Women with Turner Syndrome are both estrogen and androgen deficient: The impact of hormone replacement therapy.

Explainable long-term building energy consumption prediction using the QLattice.

In under 2 mins: Why we have to understand what the decisions we make are based on and not blindly trust that a computer is right.

The QLattice, a new explainable AI algorithm, can cut through the noise of omics data sets and point to the most relevant inputs and models.

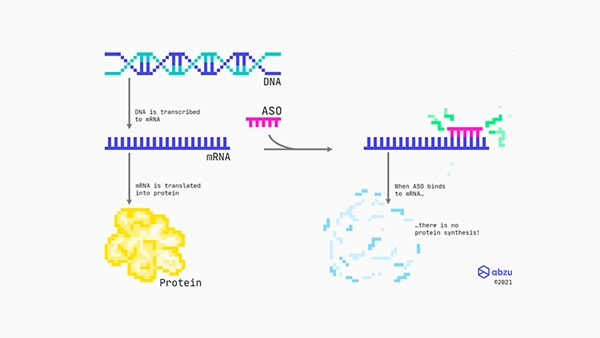

Let’s apply our repositioned selves and the QLattice in a very hot area of research: nucleic acid-based medicines. One particular type, RNA interference (RNAi), involves a process by which specific mRNAs are targeted and degraded.

The explainable models from Abzu's QLattice allow Byrd to precisely understand what inputs have been used to determine if content has been falsified.

Curing your data preprocessing blues: Automatic handling of categorical data and scaling.

A 17-minute video about Abzu's origins and an impactful application of explainable AI in life science: diagnosing breast cancer mortality.

“Emerging Technologies for Healthcare” begins with an IoT-based solution for the automated healthcare sector which is enhanced to provide solutions with advanced deep learning techniques.

The increasing application of black-box models gives rise to a range of both ethical and scientific questions.

Abzu researches the best way to design antisense oligonucleotides (ASOs), including which features contribute to toxicity.

Data sciencey-sphere, I have big news: A radical new machine learning model has surfaced.

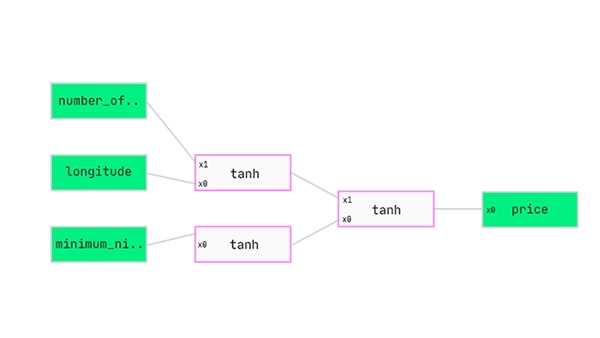

Opening the black box of AI with explainable AI (the QLattice). What does an explainable model look like? What does a plot look like?

Do you think about machine learning? How about all the research put into self-driving cars or image recognition or natural language processing?
